What Is AI Risk: Everything You Need to Know

AI is growing fast. We now see it in instant-response chatbots that answer questions and in driverless cars that move through busy streets. This technology is changing how we work, live, and communicate. The progress looks magical, yet it is also highly practical.

However, ease of use comes with real risks. AI systems can spread fake news, reinforce discrimination, or cause unsafe driving. These problems show that even neutral-looking systems can create harm. This article examines the risks of AI and highlights the steps we must take to reduce them.

What Is AI Risk?

AI risk means the problems that can happen when we use artificial intelligence. These problems can include spreading false information, unfair hiring, workplace changes, accidents, or privacy issues. AI risk also covers security threats like hacking or leaking private data. Put simply, AI risk is the danger that AI can pose if people do not manage or control it well.

Common Types of AI Risk

AI systems come with many risks. They can show bias, spread misinformation, cause job losses, or fail to follow safety rules. These concerns highlight the real challenges that AI brings to our world today.

1. AI Bias in Hiring

AI bias in hiring occurs when recruitment tools trained on historical data repeat or amplify existing prejudice. Rather than leveling the playing field, these tools can silently screen out qualified candidates because of their gender, ethnicity, or other demographic traits.

The Amazon Case

The Amazon AI recruiting tool is one of the best-known examples of AI bias in hiring. It was designed to screen resumes and automate part of the hiring process. However, the tool was trained on past hiring data, which came mostly from male applicants. As a result, it penalized resumes that mentioned “women” or involvement with women’s issues.

This case highlights the problem of AI bias in hiring tools. Even though Amazon eventually scrapped the tool, it remains a key example of how poor-quality data can lead AI systems to make unfair decisions.

Bias Against Hairstyles

Bias in AI goes beyond text. Research on recruitment tools has shown that some hairstyles worn by Black women were labeled as “unprofessional.” Examples like this show how AI can reinforce discrimination based on appearance and perpetuate harmful stereotypes in hiring.

Why AI and Bias Matter in Hiring

These AI recruiting bias issues matter because jobs shape people’s futures. When technology overlooks competent applicants because of their gender or ethnicity, diversity and equity suffer. The Amazon case and the hairstyle findings illustrate why every employer needs to test and audit its AI hiring systems regularly.

2. AI-Generated Fake News

AI fake news is text, images, or video created by artificial intelligence that looks real but is actually false or misleading. AI tools can generate such material in seconds and at very low cost. Because of this, social media and news platforms can quickly become flooded with false information.

Political Manipulation Examples

In California, Governor Gavin Newsom used AI technology to create and share satirical images of Donald Trump on a fake Time magazine cover. Although the images were meant as a joke, they point to a serious reality: AI-generated images can blur the line between truth and fiction.

Like other generated content, AI-generated images raise concerns about misinformation. When shared without context, such AI-generated fake news can seriously harm a person’s reputation or influence voters.

Impact on Children’s Media

Fake content affects more than politics. On YouTube Kids, AI has produced “cartoons” with distorted stories, edited images, and even suggestive themes. This kind of material is troubling for children. They cannot easily tell the difference between what is real and what is not. As a result, they may face serious risks. Parents feel alarmed because inappropriate content can appear as harmless entertainment.

Why Fake News Matters

Misinformation is causing people to lose trust in the media, governments, and even personal relationships. Many users struggle to tell what is real and what is false when AI systems flood platforms with disinformation. This problem is not only technical. It is also a social issue that raises serious concerns about AI safety.

How to Respond to AI-Generated Fake News

- Platforms can build more effective detection systems that flag and label AI content.

- Schools can teach digital literacy so that children and adults learn to analyze the content they see online.

- Legislation can regulate the use of deceptive AI during elections and in children’s media.

Altogether, these steps reduce the dangers of artificial intelligence tied to fake information.

3. Job Replacement

One of the major effects of AI is the decline of entry-level jobs. A Stanford study shows that the number of secretaries, junior accountants, and marketing assistants has dropped by 6–13% since late 2022. Many of these roles are now being replaced by automation. These positions once served as entry points into various career paths, but that gateway is quickly shrinking.

The Decline of Traditional Office Work: Take the office secretary as an example. Tasks like scheduling, filing, and handling correspondence are now automated by AI tools and virtual assistants. AI is not just changing work; it is eliminating entire types of jobs.

Economic and Social Consequences: Job losses have real effects on people and families. They create financial strain and widen inequality. The decline of entry-level positions also limits opportunities for younger workers to advance in their careers. This generational gap is another serious risk that AI poses in the workforce.

Managing the Transition: Strong AI risk management is essential. Companies and governments should focus on retraining programs, upskilling initiatives, and support systems to ensure smooth career transitions. Without these measures, workers may face severe economic and social challenges.

4. AI Safety Concerns

Accidents involving Tesla’s driver-assistance features show what can happen when people rely too much on AI systems. Research shows Tesla’s Autopilot has been involved in serious accidents. These occur when the system misreads road conditions or does not react in time. Such failures show that even advanced AI still needs continuous human supervision. The risk rises sharply when drivers trust automation more than their own judgment.

AI Risks for Teenagers

Teens are one of the most vulnerable groups to the risks of AI. Chatbots and digital companions can help reduce loneliness and provide emotional support. But reports about their dangers are worrying. Some AI tools made to interact with teenagers have been linked to self-harm and thoughts of suicide.

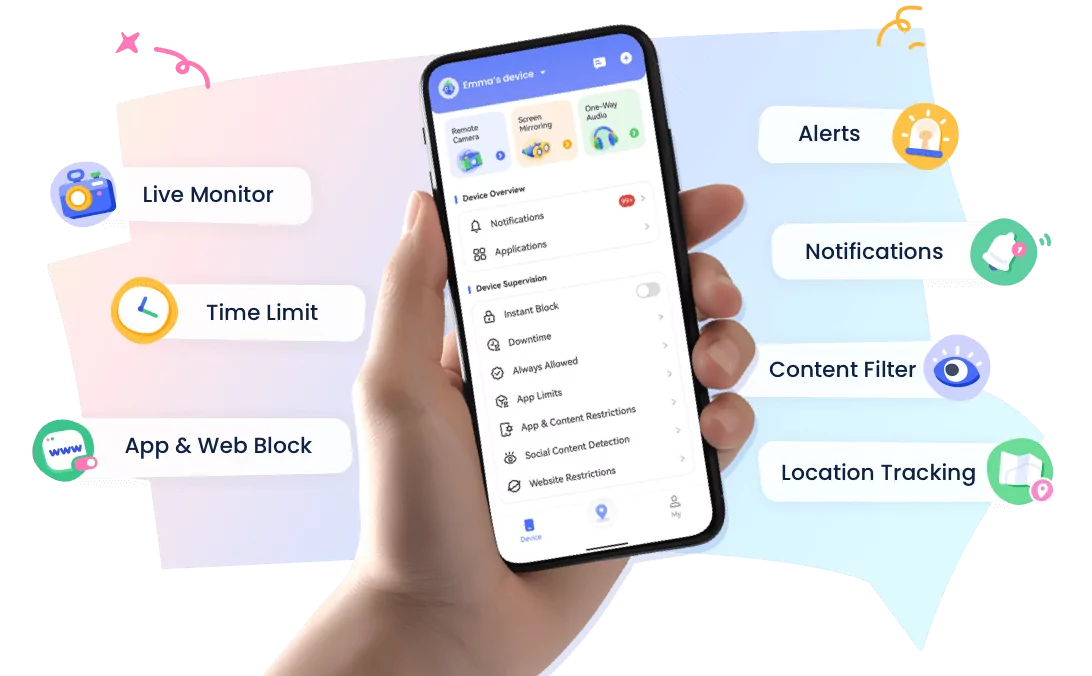

AirDroid Parental Control helps minimize children’s exposure to AI content related to self-harm by letting parents manage their children’s devices. Parents can limit unnecessary apps, distractions, and harmful AI interactions while guiding healthier device use.

How Focus Mode Helps Reduce AI Risks for Teenagers:

- Blocks dangerous or addictive apps, keeping teenagers safe from AI content that could cause harm.

- Helps teens stay focused on their studies during online classes or while completing assignments.

- Supports a good night’s sleep by preventing late-night screen use and video gaming.

- Helps children manage their focus time independently, which builds self-discipline.

By working with their children to create a focus rule, parents turn digital wellness from control into cooperation. This approach helps teenagers develop responsibility while respecting the boundaries set around AI use.

Why AI Risk Matters

Understanding the risks and effects of AI is important because it touches all parts of life.

- Fairness: In lending, hiring, and housing, using biased systems can take away opportunities from many people.

- Trust: AI-generated fake news lowers trust in online information. It makes it harder for people to agree on what is true.

- Work: AI is changing jobs. Millions of workers may need retraining, especially in industries that are automating fastest.

- Safety: Uncontrolled AI can pose serious risks, including car accidents and harmful behavior from chatbots.

AI Risk Management Strategies

Mitigating the risks of AI requires a combination of approaches, including technology, policy, and education.

1. Audit for fairness

Organizations need to audit their systems regularly for bias. They should review outcomes across different groups and adjust training data to reduce unfair results in AI hiring and other areas.
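One simple way to check outcomes is to compare how often each group passes an AI screening step. The sketch below is only an illustration: the group labels and counts are made up, the function names are hypothetical, and the 0.8 threshold follows the "four-fifths" rule of thumb often used in employment audits, which this article does not prescribe. A low ratio is a signal to investigate, not proof of discrimination.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of candidates advanced by the tool, per group."""
    totals = defaultdict(int)
    advanced = defaultdict(int)
    for group, was_advanced in records:
        totals[group] += 1
        if was_advanced:
            advanced[group] += 1
    return {group: advanced[group] / totals[group] for group in totals}

def impact_ratios(rates):
    """Divide each group's rate by the highest group's rate."""
    best = max(rates.values())
    if best == 0:
        # Nobody was advanced, so there is nothing to compare.
        return {group: 1.0 for group in rates}
    return {group: rate / best for group, rate in rates.items()}

def flag_groups(ratios, threshold=0.8):
    """List groups whose ratio falls below the chosen threshold."""
    return sorted(group for group, ratio in ratios.items() if ratio < threshold)

# Made-up screening outcomes: (group label, advanced to interview?)
sample = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

rates = selection_rates(sample)
ratios = impact_ratios(rates)
flagged = flag_groups(ratios)

print("Selection rates:", rates)
print("Impact ratios:", ratios)
print("Groups to review:", flagged)
```

In this made-up sample, group B is advanced half as often as group A, so it falls below the threshold and is flagged for a closer look at the tool and its training data.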

2. Promote transparency

It is important to show how an AI system reached a decision and which factors influenced it. Clear explanations make the system accountable and help people trust it.
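For a simple scoring model, showing which factors influenced a decision can be as basic as listing each factor's contribution to the final score. The sketch below assumes a hypothetical linear screening score; the feature names, weights, and applicant values are invented for illustration. Real hiring systems are usually more complex and need dedicated explanation methods.

```python
def explain_score(weights, features, bias=0.0):
    """Return the total score and each factor's contribution, largest first."""
    contributions = {
        name: weight * features.get(name, 0.0)
        for name, weight in weights.items()
    }
    score = bias + sum(contributions.values())
    # Rank by absolute size so strong negative factors are not hidden.
    ranked = sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)
    return score, ranked

# Hypothetical weights of a linear screening model.
weights = {
    "years_experience": 0.5,
    "skills_matched": 1.0,
    "employment_gap_years": -0.25,
}

# Hypothetical applicant.
applicant = {
    "years_experience": 4,
    "skills_matched": 3,
    "employment_gap_years": 2,
}

score, ranked = explain_score(weights, applicant)

print(f"Score: {score}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

A breakdown like this lets a reviewer see, for example, that an employment gap lowered the score, and then ask whether that factor is fair to use at all.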

3. Detect and label synthetic content

Platforms need systems that can identify and tag AI-generated content to limit the effects of AI-generated disinformation.

4. Reskill and retrain workers

The risk of losing jobs is growing. Governments and businesses should create training programs that help workers adapt to new challenges.

5. Design safe systems for families

AI products used by children and teenagers need safeguards against harmful interactions. Parental control tools such as AirDroid Parental Control can help families limit exposure to harmful AI content and guide healthier device use.

6. Strengthen laws and policies

Governments must regulate the use of AI technology in elections, healthcare, and child protection. Laws must keep pace with technology.

7. Build public AI literacy

Everyone should understand AI and know its limits. People should also learn to judge content carefully, especially online. Thinking clearly is one of the best ways to protect against AI risks.

Conclusion

AI offers many opportunities but also brings many challenges. The benefits include speed, efficiency, and productivity. The risks are real. They include biased hiring, job loss, fake news, and safety issues. Audits, rules, and tools like AirDroid Parental Control can help manage these risks. This way, AI can improve society while reducing harm.
