What is AI Privacy: Everything You Need to Know

AI is transforming nearly everything in our lives, from the way we search online and interact with our data to where we park, how we heat our homes, where we work, and how we shop. As these capabilities expand, so do the privacy concerns.

What happens when artificial intelligence knows too much? This article takes an in-depth look at the relationship between AI and privacy and the risks involved. We discuss how AI privacy protection is implemented, upcoming legislation, and ways families can protect themselves.

What is AI Privacy?

AI privacy is the protection of personal information when AI systems collect, process, or generate insights from data. Unlike traditional tools, which only store or share information, AI can:

- Learn patterns from training data.

- Predict behaviors or traits of individuals.

- Generate outputs that could accidentally reveal sensitive information.

With AI, privacy becomes much more complex because the technology can reveal information that was never disclosed. For example, a person's health status or presence at a particular location can be inferred from seemingly harmless pieces of information.

AI privacy issues occur at multiple levels:

- Collection: Managing what data is collected.

- Training: Ensuring that models don’t memorize sensitive information.

- Inference: Preventing unwanted or unrequested predictions about individuals.

- Outputs: Avoiding leaks of personal information in what the model generates.

- Control: Giving people the ability to delete or limit the data gathered about them and how it is used.

AI privacy aims to strike a balance between the need for innovation and preserving a future in which people can control their data and information about their lives.

Why It’s Different from General Data Privacy

Traditional data privacy relies on securing databases: protecting documents, restricting access, and destroying records when they are no longer needed. That works for simple storage, but it is not enough for AI, which is why it is important to understand what data privacy means in an AI context.

AI systems do not just store data; they are trained on it. They learn patterns, make new predictions, and generate outputs that can disclose personal information. This is why AI and data privacy is a more nuanced problem, one that calls for more stringent laws and safeguards.

Comparison Sheet: Traditional vs. AI Privacy

| Aspect | Traditional Data Privacy | AI Privacy |
|---|---|---|
| Focus | Protect raw files and databases | Protect data and how models learn and output information |
| Risks | Hacks, leaks, over-collection | Inference risks, profiling, memorization, output leaks |
| Deletion | Remove data from storage | Retrain or unlearn so AI no longer reflects that data |
| User Rights | Access, correct, delete | Same rights plus transparency on automated predictions |
| Tools | Encryption, access logs | Differential privacy, federated learning, and unlearning |
| Examples | Email database breach | AI predicting health conditions from browsing habits |

So, what does AI data privacy actually mean? It refers to the measures used to protect both the original data and the knowledge an AI system derives from that data.

AI Privacy Concerns and Risks

AI technologies introduce a number of additional risks that traditional security measures are not designed to handle. Some of the most critical AI data privacy concerns are:

- Data misuse: Companies might collect personal data from online sources without proper authorization.

- Unauthorized access: Hackers who exploit vulnerabilities can cause AI privacy breaches that affect both raw datasets and the behavior of the models trained on them.

- Profiling: AI can infer sensitive traits, such as health risks or political leanings, which can lead to unfair or harmful treatment.

- Output leaks: Because large models are trained extensively on raw data, they sometimes repeat parts of that training data in their outputs.

Real-World Examples of AI Privacy Issues

Clearview AI Facial Recognition

Clearview built a giant database of facial images by scraping billions of photographs from the internet. Regulators and courts have mounted legal challenges to its practices, and in 2020 a Clearview AI data breach exposed its client list along with sensitive data.

Strava Heat Map

Strava, a fitness-tracking app, was found to have inadvertently disclosed sensitive data through its publicly accessible heat map. The map was built from automatically logged exercise activity, and it exposed potentially revealing movement patterns around military bases and other secure installations.

Chatbot Bug (2023)

ChatGPT had a bug that exposed chat titles and partial billing information from other users of the service. This incident was one of the more memorable AI privacy events of the last couple of years.

Inference Attacks

Another privacy concern is that a trained model can be probed to reconstruct the inputs it was trained on. An attacker can even determine whether a specific person's data was used in training. Because these attacks work through the model itself, defending against them requires methods beyond traditional security defenses.
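
The idea of checking whether a record was used in training can be sketched with a toy example. The code below is only an illustration under loud assumptions: `MemorizingModel` is a hypothetical stand-in for an overfit model, the data points are made up, and the 0.9 threshold is arbitrary. Real attacks target real models and are considerably more involved.

```python
import math
from typing import List, Sequence

Point = Sequence[float]


class MemorizingModel:
    """Toy stand-in for an overfit model (hypothetical, for illustration only)."""

    def __init__(self, training_points: List[Point]) -> None:
        self._train = list(training_points)

    def confidence(self, x: Point) -> float:
        """Return a score in (0, 1]; exactly 1.0 when x matches a training point."""
        nearest = min(math.dist(x, t) for t in self._train)
        return math.exp(-nearest)


def looks_like_member(model: MemorizingModel, x: Point, threshold: float = 0.9) -> bool:
    """Threshold attack: guess 'was in the training data' when confidence is high."""
    return model.confidence(x) >= threshold


members = [(0.0, 0.0), (1.0, 2.0), (3.0, 1.0)]  # records used for training
outsiders = [(5.0, 5.0), (0.5, 0.5)]            # records never seen in training
model = MemorizingModel(members)

print([looks_like_member(model, p) for p in members])    # [True, True, True]
print([looks_like_member(model, p) for p in outsiders])  # [False, False]
```

If a simple test like this can separate training records from other records, the model is leaking membership information, even though no file was ever stolen.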

These examples show what privacy breaches look like in the modern digital landscape. The danger goes beyond files being hacked. The worry is how AI systems parse and reveal data, exposing details that users never expected to share.

AI and Data Protection

Strong AI data protection requires combining standard security measures with AI-specific strategies. Organizations still need to secure their databases, of course, but they also need to ensure that AI doesn’t inadvertently leak or misuse what it has learned.

1. Core Strategies for AI Privacy

- Federated Learning (Google Gboard): Keeps raw data on devices. Instead of personal texts being sent to a server, each device trains the model locally and shares only model updates, which are combined into a global model.

- Differential Privacy (Apple): Adds mathematical “noise” to obscure individual data points within large populations. For example, Apple uses this technique in predictive typing and Photos.

- Encryption & Secure Hardware: Safeguards sensitive data both in storage and in transit. Android’s Private Compute Core secures AI processes by isolating them from the network.

- Leak Testing: Membership inference and model inversion tests probe a model to determine whether it leaks information about its training data.

- Machine Unlearning: Enables AI to “forget” data upon user deletion requests, so that models no longer reflect data that has been removed.
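
To make the "noise" idea behind differential privacy more concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a counting query. It is not Apple's implementation. The function names (`laplace_noise`, `private_count`), the sample ages, and the epsilon value are all assumptions chosen for illustration.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two exponential draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the values that satisfy predicate.

    A counting query has sensitivity 1 (one person changes the count by at
    most 1), so Laplace noise with scale 1/epsilon is the standard choice.
    """
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical data: ages of eight users (made up for this example).
ages = [23, 35, 41, 29, 52, 38, 61, 19]
rng = random.Random(0)  # seeded only so the demo is repeatable; not for real use
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(f"noisy count of users aged 40+: {noisy:.2f}")  # the true count is 3
```

A smaller epsilon adds more noise and gives stronger privacy, at the cost of less accurate statistics. Averaged over a large population the noise largely cancels out, which is why aggregate patterns survive while individual contributions stay hidden.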

2. Real-World Practices

- Apple implements differential privacy techniques to analyze global usage patterns while keeping each individual user's behavior masked.

- Google implements federated learning in Gboard, which allows improvement of typing models without the need to upload raw keystrokes to its servers.

These examples illustrate how major companies incorporate AI privacy and security into convenient everyday features.
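
The federated learning idea can be sketched in a few lines. The example below is a simplified simulation of federated averaging on a one-parameter linear model, not Google's production system. The client data, learning rate, and function names (`local_update`, `federated_round`) are assumptions made for illustration, and real deployments add protections such as secure aggregation.

```python
from typing import List, Tuple

Client = List[Tuple[float, float]]  # (x, y) pairs that stay on the device


def local_update(w: float, data: Client, lr: float = 0.01, steps: int = 5) -> float:
    """Run a few gradient-descent steps for y = w * x on one client's data."""
    for _ in range(steps):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_round(w_global: float, clients: List[Client]) -> float:
    """One round: each client trains locally, the server averages the weights.

    In this simulation all clients live in one process; in a real deployment
    only the returned weights, never the raw (x, y) pairs, would leave a device.
    """
    total = sum(len(c) for c in clients)
    updates = [(local_update(w_global, c), len(c)) for c in clients]
    return sum(w * n for w, n in updates) / total


# Hypothetical private datasets on three devices, all roughly following y = 3x.
clients = [
    [(1.0, 3.1), (2.0, 5.9)],
    [(3.0, 9.2), (4.0, 11.8), (5.0, 15.1)],
    [(1.5, 4.4)],
]

w = 0.0
for _ in range(50):
    w = federated_round(w, clients)
print(f"learned slope: {w:.2f}")
```

The server ends up with a slope close to 3 without ever seeing an individual data point. Weighting each client's result by its number of samples keeps devices with more data from being under-represented.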

Future of AI Privacy

The future of AI privacy will be shaped by changes in the law as well as by how fast technology develops. As AI becomes widely used in the healthcare, finance, education, and communication sectors, it will be essential to protect sensitive information in order to maintain public confidence and reduce AI privacy risks.

1. Legal Developments

- GDPR (Europe): Gives people fundamental rights to access, delete, and object to profiling. These rules will continue to influence global standards.

- EU AI Act (2024): Sets out a risk-based approach that bans certain AI uses deemed too risky and mandates transparency for systems that affect the rights of citizens.

- U.S. State Laws (like CCPA in California): Grant users more rights and require companies to inform users about AI-enabled services and how their personal data is used.

- FCC Regulations: Robocalls that use AI-generated voices are banned, showing that regulators are now focused on emerging risk areas.

2. Technological Advances

On the technical front, researchers are focusing on:

- More accurate differential privacy techniques that maintain privacy on an individual level.

- Scalable methods for machine unlearning that allow models to “unlearn” portions of user data on request.

- Synthetic data generation that lets companies train AI systems without revealing personal information.

- Model cards and datasheets that provide transparency about the sources, risks, and limitations of the training data.

The combination of the policies and the outlined technological developments aims to establish a future in which data privacy is not an afterthought but integrated by design. How data privacy is balanced with technological innovation and user safety will shape the future of AI for the next decade.

Bonus: Protect Your Family for Safer Digital Privacy

AI privacy isn’t just about international policies and businesses; it affects families on a daily basis. Children now use learning tools, games, and applications powered by AI. Unfortunately, many children and teenagers aren’t familiar with the concept of privacy, and as a result, they share a staggering amount of data. To protect themselves, families need more than just knowledge; they need actionable strategies.

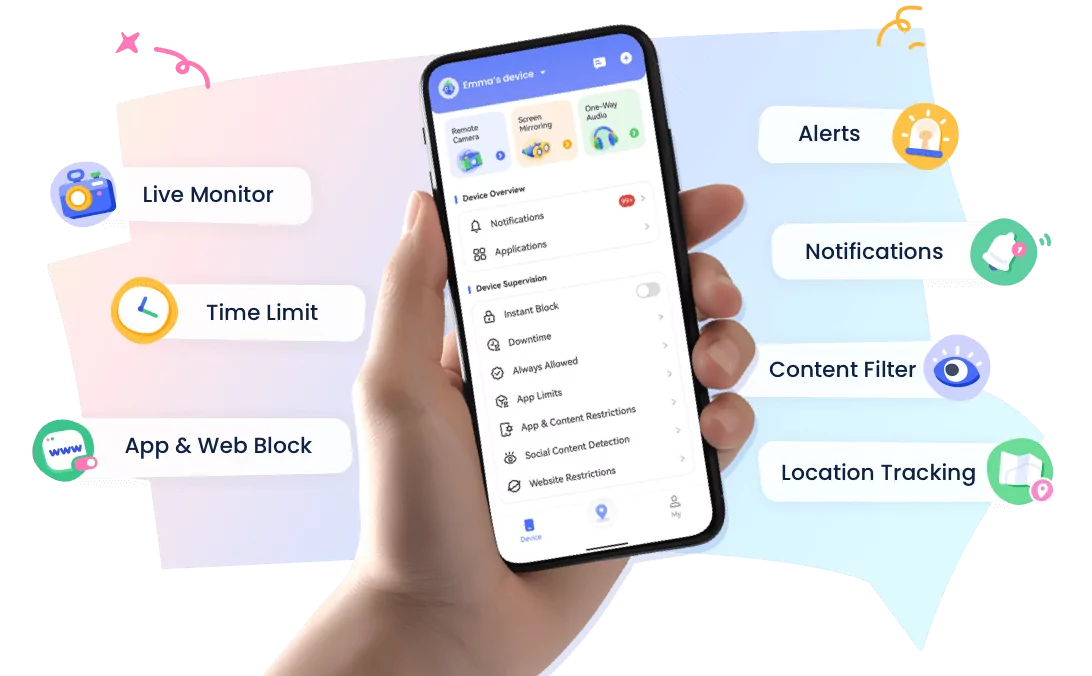

AirDroid Parental Control is one of the best ways parents can manage digital privacy at home. It offers monitoring and other features that help mitigate the dangers posed by AI-powered applications:

1. Real-Time Monitoring

Helps parents monitor their child’s device screens in real-time, reducing the risk of AI-powered apps collecting sensitive data without the user's consent.

2. Website and App Blocking

Restricts access to harmful/untrusted AI-enabled apps and websites so that children do not unintentionally get tracked, profiled, or exposed to AI systems that collect private information.

3. Screen Time Management

Limits device usage so that children are less exposed to constant AI-driven recommendations and algorithms that try to influence their behavior and habits or encourage them to share unnecessary data online.

4. Location Tracking and Alerts

AirDroid offers real-time GPS location tracking and immediate alerts for geofences so parents can always keep an eye on where their children are.

5. Focus Mode

It allows parents to limit screen time, block distracting or potentially unsafe apps, and encourage mindful device use. It also helps parents identify AI-related privacy concerns, potential privacy invasions, inappropriate usage, or aggressive data collection at an early stage.

With AirDroid Parental Control, families can establish a protective barrier around their children’s online activities. It can help turn AI from a potential privacy danger into an everyday tool that is much safer and easier to manage.

Conclusion

The growing use of AI technologies has highlighted the issues of privacy and the need to protect sensitive information more than ever. AirDroid Parental Control demonstrates how modern technology can be utilized for proactive decision-making. Through the combination of strong protective measures and innovation, we can foster a safe environment that supports technological growth while upholding user privacy.

FAQs

1. Can AI truly delete my data if I request it?

Yes, but it’s complex. Removing your records from storage is not enough; the model typically has to be retrained or put through machine unlearning so that it no longer reflects your data.

2. Is anonymized data always safe for AI training?

No. The Netflix Prize case showed that anonymized datasets can often be re-identified by cross-referencing them with public data, so they are not always safe.
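
A toy linkage attack shows why removing names is not enough. The sketch below is only an illustration: every record, user ID, and name is made up, and the `reidentify` helper and its `min_overlap` parameter are hypothetical. It matches pseudonymous rating histories against a few public reviews.

```python
from typing import Dict, Set, Tuple

Rating = Tuple[str, str]  # (title, date rated)

# "Anonymized" release: names replaced by opaque IDs (all data is made up).
anonymized: Dict[str, Set[Rating]] = {
    "user_17": {("Movie A", "2005-03-01"), ("Movie B", "2005-03-04"), ("Movie C", "2005-04-11")},
    "user_42": {("Movie A", "2005-06-20"), ("Movie D", "2005-07-02")},
}

# Public reviews posted elsewhere under a real name (also made up).
public_reviews: Dict[str, Set[Rating]] = {
    "alice": {("Movie A", "2005-03-01"), ("Movie C", "2005-04-11")},
}


def reidentify(anon: Dict[str, Set[Rating]],
               public: Dict[str, Set[Rating]],
               min_overlap: int = 2) -> Dict[str, str]:
    """Link a public name to an anonymous ID when exactly one ID shares enough ratings."""
    matches = {}
    for name, reviews in public.items():
        candidates = [uid for uid, ratings in anon.items()
                      if len(ratings & reviews) >= min_overlap]
        if len(candidates) == 1:  # a unique match means the person is re-identified
            matches[name] = candidates[0]
    return matches


print(reidentify(anonymized, public_reviews))  # {'alice': 'user_17'}
```

Two matching ratings are enough to single out one record here, which also exposes the third rating that was never posted publicly.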

3. How do I check if an AI app respects privacy?

An app's privacy policy is usually the best place to start. A good example is the Character.AI privacy policy, which details how conversations are used and stored for training purposes.

4. What steps can families take for safer AI use?

Avoid sharing too much personal information, do not grant apps excessive permissions, and reduce exposure with tools such as AirDroid Parental Control.
