AI Scams Are Targeting Families — Here's How to Stay Safe!

"Hi Grandma. Yeah, I got in trouble here. The police say they need some money to release me, or they're going to keep me in jail."

Have you ever gotten a text or call just like that? It sounded like your loved one was asking for help. But the twist? Many of these are AI scams -- schemes where scammers use artificial intelligence tools to deceive their target.

These scams aren't just theoretical! In 2025, a woman was conned out of $15,000 in an AI voice scam in which her daughter's voice was cloned.

Families, especially the elderly and kids, are becoming easy targets of AI scams. But don't fret! Here, we'll expose some common AI scam techniques and show how you and your family can stay safe.

Why Families are Becoming Targets for AI Scams

You might think AI scammers mainly target financial institutions or individuals seeking romance or jobs. But now they've shifted their attention to families. The reasons include:

1. Kids and the Elderly Are Vulnerable

Because their cognitive skills are still developing, many young users may struggle to tell whether a scammer's story is real. Older adults, on the other hand, are often less familiar with the internet and more trusting of urgent requests, especially if they hear what sounds like a loved one's voice.

All of this makes it easier for scammers to trick them with fake websites or AI-generated calls.

2. Easy Data Access

Family data, such as personal phone numbers and photos, is often easy for scammers to access.

For instance, when you share family videos on social media, scammers can scrape images, voices, and other data about not only you but also your family.

Likewise, if your family address or phone number is on social media platforms, people-search websites, or packages that you threw away, scammers can get hold of it and use it for scams.

3. High Trust within Families

It is natural for family members to trust one another. After all, blood is thicker than water. Suppose you get a call from your child claiming to have been in an accident and needing money urgently. You'll likely rush to act without hesitation, because it is your child and you trust them. Many AI fraudsters understand this vulnerability, and they've begun to exploit it to deceive families.

4. Huge Family Income

A small amount of money doesn't satisfy AI scammers. They are after a big payout. Since family finances often combine multiple incomes, scammers believe that targeting families gives them a golden opportunity for a larger payoff.

Common AI Scam Types & Scenarios in Families

Now that you know why scammers are targeting families, let's quickly look at the common AI scam types and scenarios in families so you can spot them.

2.1 Voice Cloning Scam

Scammers obtain a sample of a person's voice, either from social media videos or from phone call recordings, and use AI to generate new speech that sounds just like that person. Using this cloned voice to trick a victim's family into making urgent money transfers is known as an AI voice cloning scam.

Scenario:

"Mom, my car just hit a child now. I need a sum of $8k right now to cover hospital bills and damages, otherwise, they won't let me go."

Scammers impersonate your child or older parents, mimicking their voices, often while crying, to ask for urgent medical help or money in a fake emergency or kidnapping.

Real-life Case:

A real-life example of this is the case of Anthony, who received a call that sounded exactly like his son, claiming he had knocked down a pregnant woman and needed $25,000 for bail. Out of fear, he sent the money, only to later discover that his son's voice had been cloned and he had been scammed.

2.2 Deepfake Video and Image Scam

Deepfake scams go beyond voice cloning. Using machine learning algorithms, scammers can now generate fake images and videos to impersonate people and deceive their victims.

In this scam, scammers feed AI models with real photos or videos of a target's loved ones, authorities, or Medicare representatives. The AI then learns their facial expressions and voice to create highly realistic fakes.

Scenario:

Fraudsters obtain your family's images or videos to create fake videos. Then they use the deepfake video to gain your trust and trick you into giving them money.

In another scenario, scammers may fabricate a video showing your daughter or son tied up, crying, and begging for help. They then demand ransom money for your child's release.

What's worse, if they can get your bank details, they may bypass facial recognition on financial accounts and make transfers.

Real-life Case:

In Utah, scammers tricked a Chinese exchange student into isolating himself and staged it to look like a kidnapping. They then sent fake photos to his family, demanding $80,000.

2.3 AI-powered Phishing Scam

In the AI-powered version of phishing, scammers use AI tools to mimic the texting style of a target's loved one and craft convincing emails or messages that trick victims into revealing sensitive information or sending money.

Scenario:

Fraudsters might send messages that look exactly like your child's or your parent's texting style, requesting urgent money.

Scammers may even generate a fake newsletter from your child's school or a nursing home, asking you to pay a required fee via a link. The payment link then steals your credit card info.

Real-life Case:

A woman received messages from someone posing as her daughter in London, claiming she'd lost her phone and urgently needed £1,600 for work. Because the messages matched her daughter's habits, she believed they were real and paid, only to discover later that it was a scam.

How to Spot AI Scams to Avoid Being Scammed

Now that you're aware of the common AI-driven techniques fraudsters use to scam their victims, let's look at how to spot these tricks to avoid being scammed.

Unusual Urgency

If you look at the scams described above, you'll notice that a common tactic is to create an unusual sense of urgency. The goal is to leave victims no time to think or double-check what's going on.

If you hear a statement like "I can't [do something] until I pay the bill/unless you send money," there's a good chance you're being targeted.
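For readers who like to tinker, the urgency check can be sketched in a few lines of Python. This is only a rough illustration, not a real scam detector: the phrase list `URGENCY_PATTERNS`, the function names, and the threshold of two matches are all assumptions made up for this example, and a determined scammer can easily word a message to avoid them.

```python
import re

# Hypothetical phrase patterns chosen for illustration; not an exhaustive list.
URGENCY_PATTERNS = [
    r"\bright now\b",
    r"\burgent(ly)?\b",
    r"\bimmediately\b",
    r"\bunless you (send|pay)\b",
    r"\bsend (me )?(the )?money\b",
    r"\bbail\b",
    r"\bwon't let me go\b",
]


def urgency_flags(message: str) -> list[str]:
    """Return the patterns from URGENCY_PATTERNS that appear in the message."""
    text = message.lower()
    return [pattern for pattern in URGENCY_PATTERNS if re.search(pattern, text)]


def looks_urgent(message: str, threshold: int = 2) -> bool:
    """Flag a message when at least `threshold` urgency patterns match."""
    return len(urgency_flags(message)) >= threshold
```

A flagged message is not proof of a scam, and an unflagged one is not proof of safety. Treat a match as a prompt to slow down and verify before sending anything.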

Unnatural Tone or Language

AI is not a real person, and its imitations are not perfect.

For example, however real an AI-cloned voice or deepfake video may sound, you may still notice speech patterns that feel off or unnatural. At times, AI-powered bots also give responses that are too generic or strangely repetitive.

Inconsistencies in Communication

Beyond unnatural tone and language, AI-driven technologies also struggle with consistency. Even though AI can mimic a person's face or voice, it often struggles with continuity, especially over longer interactions.

If you notice that someone's communication doesn't match their usual behavior during an audio or video call, trust your gut.

Unrealistic Visual Content

AI scams that involve deepfake videos often contain unrealistic visual content, such as implausible backgrounds. For instance, in many AI-generated videos the lighting doesn't match the scene. If something looks "off," zoom in and check it carefully.

Verification Resistance

One attribute of scammers, including those involved in AI scams, is that they always avoid scrutiny. As soon as you try to verify who they are, they become evasive because they don't want you to see through their fake identity. This is why setting a safe word in your family is necessary.

Protecting Yourself and Your Family from AI Scams

To protect yourself and your family from AI scams, follow and share the tips below:

Verify the Source Before Trusting

Even if an AI-made message or video looks real, verify the source whenever it asks you to send money or perform a sensitive task. Doing this can thwart the scammer's plan.

For example, if someone calls from a number that appears to belong to a family member and demands money, hang up first. Then call them back on the number you have saved in your phone, contact them on social media, or meet them in person.

Create Family Safe Words

You can also create a secret code or safe word known only to your family members. Whenever someone who sounds like your loved one claims to be in an emergency or demands money, ask them for the family's safe word.

If they fail, hang up and block the number. When creating the safe word, make sure it is unpredictable and share it only in person among family members to keep it secure.

Be Cautious about Sharing Personal Info

When scammers scout for potential victims' information, they often check social media and other public spaces. If you tend to share personal information and family memories there, you and your family are more likely to become victims.

Set up Family Call Whitelists

You can also set up family call whitelists to prevent AI scams from unknown numbers. This ensures only approved contacts can connect with you or your loved ones.

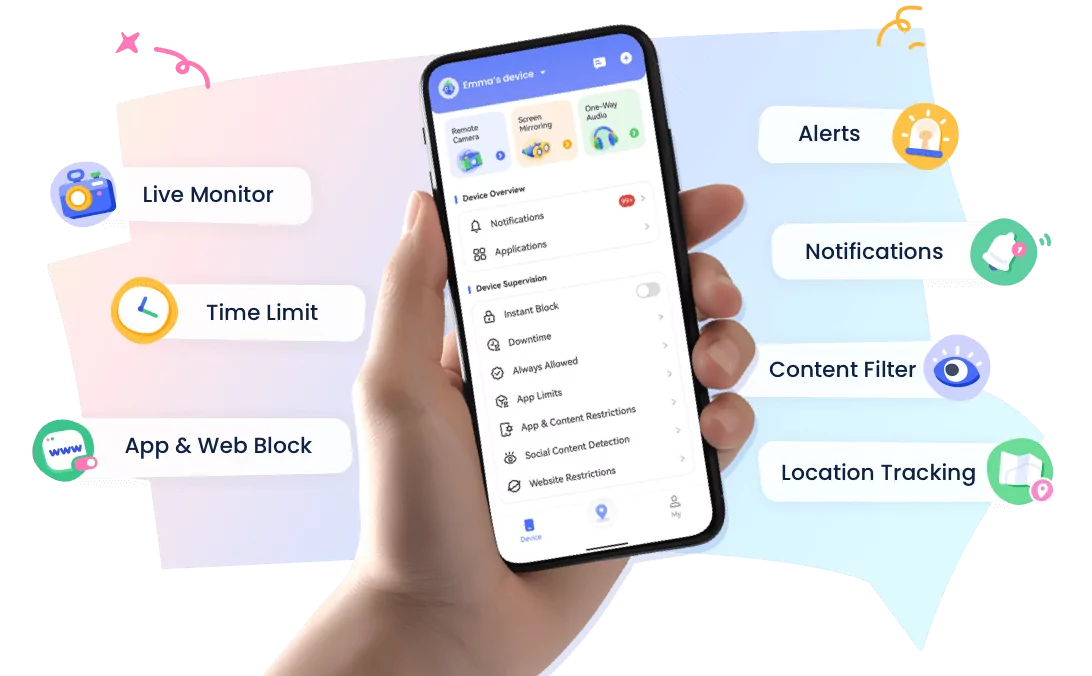

To set up a call whitelist for your child or an elderly relative, you can use the phone's built-in call blocker or AirDroid Call Monitor.

AirDroid offers a call whitelist mode that only allows emergency and whitelisted numbers to reach your kids. Plus, it can detect scam-related keywords in your kids' social media and notify you instantly. These features make it easier to spot and fight AI-driven scams.
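To make the whitelist idea concrete, here is a minimal Python sketch of the general logic: an incoming call gets through only if its number is an emergency number or an approved contact. It is an illustration under stated assumptions and does not describe how any phone or app, AirDroid included, implements this. The phone numbers and the names `EMERGENCY_NUMBERS`, `FAMILY_WHITELIST`, `normalize`, and `is_allowed` are all hypothetical.

```python
import re

# Hypothetical numbers for illustration only.
EMERGENCY_NUMBERS = {"911"}
FAMILY_WHITELIST = {"+1 (555) 010-0199", "555-010-0123"}


def normalize(number: str) -> str:
    """Keep digits only, so spaces, dashes, and brackets don't affect matching."""
    return re.sub(r"\D", "", number)


# Pre-compute the normalized set of every approved number.
ALLOWED = {normalize(n) for n in EMERGENCY_NUMBERS | FAMILY_WHITELIST}


def is_allowed(incoming: str) -> bool:
    """Allow a call only when its digits exactly match an approved number.

    Matching is exact: the same number written with and without a country
    code counts as two different numbers in this sketch.
    """
    return normalize(incoming) in ALLOWED
```

For example, `is_allowed("+1 555 010 0199")` returns `True`, while an unknown number such as `is_allowed("555-010-9999")` returns `False`. A whitelist only filters by the number shown, and as noted earlier, a scam call can come from a number that appears to belong to a family member, so it complements verification and safe words rather than replacing them.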

Contact Police If It's an Urgent Case

If the case is urgent (such as a kidnapping) and you don't know what to do, report it to the police. Police are trained to assess whether a case is real and to handle fraud, and they can guide you on the next steps.

What to Do If You or Your Family Are Scammed

If you or your family have already fallen victim to an AI scam, don't fret. Here's what you should do.

- Contact Your Financial Institution: Once scammers know you've fallen victim, their next move may be to empty the funds in your bank account. To prevent this, contact your bank quickly. They can guide you on how to block unauthorized transactions and secure your account against further attacks.

- Report the Scammer: If you've been scammed, gather all relevant evidence and report it to the appropriate authorities, who may be able to catch the scammer and help you recover your losses.

- Inform Others: Scammers sometimes continue by impersonating you or using your accounts to target your friends or relatives. Tell them not to trust any call, message, or request that appears to come from you until they have verified it.

Conclusion

With the rise of AI, voice cloning, deepfake media, and AI-powered phishing have made it much easier for scammers to target families. Fortunately, AI also has flaws.

So learn the common types of AI scams and follow the tips we've shared in this article to greatly reduce the risk that you or your family become victims. To be on the safe side, consider trying scam detector apps and parental control apps like AirDroid.
